ReadingList :: Data Engineer (Lifelong Learning)

ReadingList :: Data Engineer (Lifelong Learning)

读书就像习武之人修炼内功,好的书可以使自己功力大大提升,就像拜师武林泰斗了;

如果能面对面学习,那就更厉害了!

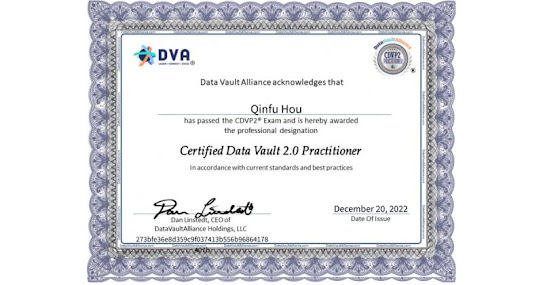

01 Building a Scalable Data Warehouse with Data Vault 2.0 [Paperback]

Reading Plan (to finish by 17/Dec)

Starting from 08/Oct/2023 ✅

15-Oct

- c1 ✅ 09/Oct

- c2 ✅ 10/Oct

- c3 ✅ 16/OCT

Notes:

- Kimball: two-layer data warehouse

- Inmon: three-layer data warehouse model

- Data sources: ERP, CRM, Files etc

- TDQM, DWQ

💪💪😄😄

22-Oct

- c4 ✅ 19/OCT

- c5 ✅ 22/OCT

Entity definitions (Hub, Link, Sat)

SAL (same-as link), various links, sats

29-Oct

- c6 ✅ 24/OCT

- c7 ✅ 26/OCT

Slow Changing Dimensions (SCD)

Star schemas

Snowflake design (indirect dimensions)

5-Nov

- c8 ✅ 02/Nov

- c9 ✅ 05/Nov

MDM

12-Nov

- c10 ✅ 27/NOV ✌

Metrics and Error Marts

19-Nov

- c11 ✅ 12/Nov

Data Extraction (stage loading: historical/batch)

26-Nov

- c12 ✅ 16/Nov

DV loading

3-Dec

- c13 ✅ 28/Nov

Data Quality

10-Dec

- c14 ✅ 01/Dec 😀😀😀

17-Dec

- c15 ✅ 17/OCT

Business users: Accessing Information Mart to build a multidimensional database

Merry Christmas!

SnowPro Core Certification: Data Transformations (5/8)

SnowPro Core Certification: Data Transformations (5/8)

Please subscribe YouTube Channel(请订阅油管频道): Data Driven Wealth 数说财富 DDW - YouTube

✅Transforming Data During a Load

Snowflake supports transforming data while loading it into a table using the COPY INTO <table> command, dramatically simplifying your ETL pipeline for basic transformations. This feature helps you avoid the use of temporary tables to store pre-transformed data when reordering columns during a data load. This feature applies to both bulk loading and Snowpipe.

The COPY command supports:

Column reordering, column omission, and casts using a SELECT statement. There is no requirement for your data files to have the same number and ordering of columns as your target table.

The ENFORCE_LENGTH | TRUNCATECOLUMNS option, which can truncate text strings that exceed the target column length.

While loading the data, complex transformations such as joins, filters, aggregations, and the use of FLATTEN are not supported as they are not essential data transformations. Therefore, joining, filtering, and aggregating the data are supported ONLY after the data has been loaded into a table.

The table stages do not allow basic transformations during the COPY process; thus, basic transformations may only be performed while loading data from external stages, named internal stages or user stages.

👉Supported File Formats

The following file format types are supported for COPY transformations:

CSV

JSON

Avro

ORC

Parquet

XML

To parse a staged data file, it is necessary to describe its file format:

The default format is character-delimited UTF-8 text. The default field delimiter is a comma character (,). The default record delimiter is the new line character. If the source data is in another format, specify the file format type and options.

When querying staged data files, the ERROR_ON_COLUMN_COUNT_MISMATCH option is ignored. There is no requirement for your data files to have the same number and ordering of columns as your target table.

To transform JSON data during a load operation, you must structure the data files in NDJSON (“Newline delimited JSON”) standard format; otherwise, you might encounter the following error:

Error parsing JSON: more than one document in the input

Specify the format type and options that match your data files.

To explicitly specify file format options, set them in one of the following ways:

Querying staged data files using a SELECT statement: |

|

Loading columns from staged data files using a COPY INTO <table> statement: |

|

👉Parameters in copy into

If TRUE, the COPY statement produces an error if a loaded string exceeds the target column length.

If FALSE, strings are automatically truncated to the target column length.

TRUNCATECOLUMNS:

If TRUE, strings are automatically truncated to the target column length.

If FALSE, the COPY statement produces an error if a loaded string exceeds the target column length.

If TRUE, strings are automatically truncated to the target column length.

If FALSE, the COPY statement produces an error if a loaded string exceeds the target column length.

Featured Posts

SnowPro Badges and Certificates

SnowPro Badges and Certificates Online Verification https://achieve.snowflake.com/profile/richardhou888/wallet

Popular Posts Recommended

-

SnowPro Core Certification: Data Loading and Unloading (4/8) ✅warehouse and parallel loading XS sized warehouse can load eight files paralle...

-

Please generate a smiling border collie with this pic Not bad: 🔝border collie 💚sunset

-

Basic UNIX commands Know more about a cmd use $man command Directory pwd Show your Present Working Directory mkdir Make a directory cd dirna...

-

SnowPro Core Certification: 通过考试和下一步(7/8)【本系列完结】 感谢看到这系列的最后一篇💗💗💗💗💗💗💗 欢迎点赞,评论,和转发! 这一系列主要是我学习考证SnowPro Core Certification的总结。把分散在Snowf...

-

How to train a border collie of 5-month old Certainly! Training a 5-month-old Border Collie can be both rewarding and fun. These intelligent...

-

SnowPro Core Certification: Performance Concepts (3/8) ✅ Performance Snowflake's unique architecture and the underlying micro-partitions...